In software development, performance, scalability, and concurrency determine how well an application holds up when it has to handle large volumes of users or data. One of the most common techniques for improving performance is multithreading, which allows multiple tasks to make progress concurrently. However, implementing multithreading and scaling systems efficiently comes with its own set of challenges. Developers must ask the right questions to ensure that they use threads effectively and scale their systems to meet current and future demands.

In this article, we explore the critical questions developers should ask when considering the use of threads and scaling in their applications.

1. What Is the Problem You’re Trying to Solve with Threads?

Before diving into multithreading, it’s crucial to first define the problem you’re trying to solve. Threads are often used to address specific issues, such as:

- Improving performance: Threads can help distribute tasks across multiple CPU cores, speeding up the execution of computationally expensive processes.

- Concurrency: If your application needs to handle many tasks simultaneously, such as handling multiple HTTP requests or performing background processing, threads allow for concurrent execution without blocking the main application flow.

- Responsiveness: In user-facing applications, using threads to handle background operations (like file downloads or network requests) can improve the responsiveness of the UI.

Understanding the problem will help you determine whether multithreading is necessary and how it should be implemented. Threads are not the best solution for every performance bottleneck. For workloads dominated by waiting on I/O, an asynchronous, event-driven model (such as the Node.js event loop) or lightweight, runtime-scheduled tasks (such as goroutines in Go) might be a better fit.
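As a rough illustration of the concurrency case, the sketch below runs the same blocking tasks first one after another and then in threads. The `fetch` function is a hypothetical stand-in for a network request (it only sleeps), and the timings it produces are illustrative, not a benchmark.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fetch(item: int) -> int:
    """Hypothetical stand-in for a blocking call such as a network request."""
    time.sleep(0.05)  # simulate waiting on I/O
    return item * 2


def run_sequential(items: list[int]) -> list[int]:
    # Each call blocks until the previous one has finished.
    return [fetch(i) for i in items]


def run_threaded(items: list[int]) -> list[int]:
    # The waits overlap because each call blocks in its own thread.
    with ThreadPoolExecutor(max_workers=max(1, len(items))) as pool:
        return list(pool.map(fetch, items))


def timed(fn, items):
    """Return (result, elapsed_seconds) for fn(items)."""
    start = time.perf_counter()
    result = fn(items)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    items = [1, 2, 3, 4]
    _, seq_time = timed(run_sequential, items)
    _, thr_time = timed(run_threaded, items)
    print(f"sequential: {seq_time:.2f}s, threaded: {thr_time:.2f}s")
```

The threaded version helps here because the tasks spend their time waiting. For CPU-bound work the picture differs by language and runtime, so it is worth measuring before assuming a speedup.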

2. What Are the Available Resources?

When considering multithreading, you should evaluate the resources your system has at its disposal. Key considerations include:

- CPU Cores: Threads can run in parallel across multiple cores, but the number of cores limits how many threads can execute at the same instant. Once CPU-bound threads outnumber cores, the extra threads take turns on the same cores and add context-switching overhead, so on systems with few cores threading may not improve performance as much as expected.

- Memory: Threads share the same address space, but each thread also needs its own stack and bookkeeping. Creating too many threads can therefore lead to high memory consumption, which may cause the system to swap to disk or even crash if resources are exhausted.

- Other System Resources: Consider disk I/O, network bandwidth, and other system limitations. If your threads are waiting for external resources, like database queries or network responses, you might need to rethink how you distribute work.

As you scale the number of threads, it’s crucial to ensure that your system can support the increased load without exhausting available resources.
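One way to make the resource question concrete is to derive an initial worker count from the number of cores. The sketch below is a hypothetical rule of thumb: the function name, the multiplier of 4, and the cap of 32 are assumptions for illustration and should be replaced with values found by measurement.

```python
import os


def suggested_pool_size(io_bound: bool, cap: int = 32) -> int:
    """Hypothetical starting point for a worker count; tune it with measurements."""
    cores = os.cpu_count() or 1  # cpu_count() may return None
    if io_bound:
        # Threads mostly wait on external resources, so more threads
        # than cores can still be useful, up to an assumed cap.
        return min(cap, cores * 4)
    # CPU-bound work gains little from more threads than cores.
    return cores


if __name__ == "__main__":
    print("CPU-bound workers:", suggested_pool_size(io_bound=False))
    print("I/O-bound workers:", suggested_pool_size(io_bound=True))
```

Note that in standard CPython builds the global interpreter lock prevents threads from running Python bytecode in parallel, so CPU-bound work there is usually spread across processes instead of threads.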

3. How Do You Manage Thread Synchronization?

One of the most significant challenges of multithreading is managing synchronization. Since multiple threads may access shared resources simultaneously, there’s a risk of data corruption or inconsistencies. The question of how to manage synchronization is critical to the correctness and performance of your application.

- Locks: Use locks or mutexes to prevent concurrent threads from accessing shared data simultaneously. However, locks can introduce contention, where threads are delayed because they must wait for others to release the lock. Overuse of locks can significantly degrade performance.

- Atomic Operations: In some cases, atomic operations or lock-free data structures may be useful. These are operations that ensure data integrity without needing locks, often at the cost of increased complexity.

- Deadlock: A deadlock occurs when two or more threads are blocked forever, each waiting for another to release a resource. You must carefully design your thread interactions to avoid circular dependencies, for example by always acquiring locks in the same order.

- Thread-safe Data Structures: Consider using thread-safe collections or libraries, which can manage the complexities of synchronization internally.

The choice of synchronization method will depend on the complexity of the task and the performance characteristics you’re aiming for.
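The locking and deadlock points above can be sketched briefly. The `Counter`, `Account`, and `transfer` names below are hypothetical, chosen only for illustration. The counter shows a lock guarding a shared value, and the transfer shows one common way to avoid circular waits, assuming every account has a unique, comparable id.

```python
import threading


class Counter:
    """A counter whose updates are protected by a lock."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.value = 0

    def increment(self) -> None:
        with self._lock:  # only one thread at a time runs this block
            self.value += 1


class Account:
    """Hypothetical account with its own lock."""

    def __init__(self, account_id: int, balance: int) -> None:
        self.account_id = account_id
        self.balance = balance
        self.lock = threading.Lock()


def transfer(src: Account, dst: Account, amount: int) -> None:
    if src is dst:
        return  # nothing to move, and locking the same lock twice would block
    # Always lock the account with the smaller id first, so two opposite
    # transfers can never each hold one lock while waiting for the other.
    first, second = sorted((src, dst), key=lambda a: a.account_id)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount


def run_in_threads(fn, n_threads: int) -> None:
    """Run fn in n_threads threads and wait for all of them to finish."""
    threads = [threading.Thread(target=fn) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
```

Consistent lock ordering is only one option. Reducing the amount of shared state, or handing work between threads through a thread-safe queue, often removes the need for multiple locks altogether.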

4. How Do You Handle Thread Lifecycle Management?

Managing the lifecycle of threads is a key consideration, especially as the number of threads increases. Improper thread management can lead to performance degradation, resource exhaustion, or even application crashes. Some important lifecycle-related questions include:

- Thread Creation: How do you create and start threads efficiently? Constantly creating and destroying threads can be expensive in terms of resources. Consider using thread pools to reuse threads and avoid excessive overhead.

- Thread Pooling: A thread pool can help limit the number of threads running simultaneously, improving resource usage. It allows for threads to be reused for multiple tasks instead of creating a new thread every time a task needs to be performed. In Java, for example, the ExecutorService API provides convenient ways to manage thread pools.

- Graceful Shutdown: How do you ensure that threads are terminated correctly? If threads are not properly shut down, they can continue to consume resources after they’ve completed their tasks. Gracefully terminating threads and ensuring that no resources are leaked is vital for long-running applications.

Choosing the right thread management strategy depends on how often threads are created and destroyed and whether you need to fine-tune thread handling for optimal performance.
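A minimal sketch of pooling and graceful shutdown, written in Python with `concurrent.futures.ThreadPoolExecutor` as a rough analogue of the pooling idea described above. The `process` and `run_jobs` functions and the `worker` thread-name prefix are hypothetical.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def process(job: int) -> str:
    """Hypothetical unit of work; reports which pooled thread ran it."""
    return f"job {job} on {threading.current_thread().name}"


def run_jobs(jobs: list[int], max_workers: int = 4) -> list[str]:
    # A fixed number of worker threads is reused for all submitted jobs.
    pool = ThreadPoolExecutor(max_workers=max_workers, thread_name_prefix="worker")
    try:
        futures = [pool.submit(process, job) for job in jobs]
        return [f.result() for f in futures]
    finally:
        # Stop accepting new work and wait for running tasks to finish,
        # so no worker thread outlives the pool.
        pool.shutdown(wait=True)


if __name__ == "__main__":
    for line in run_jobs(list(range(8))):
        print(line)
```

Twenty jobs submitted to this pool run on at most four threads. Using the executor as a context manager (`with ThreadPoolExecutor(...) as pool:`) performs the same shutdown implicitly.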

5. What Are the Scalability Requirements?

Scaling a multithreaded application is more than just creating more threads—it’s about designing a system that can handle increasing loads efficiently. Questions to ask regarding scalability include:

- Vertical Scaling: Can the application scale by adding more CPU cores, memory, or other resources to a single machine? For some applications, this approach may be sufficient, but it’s not always the most cost-effective solution.

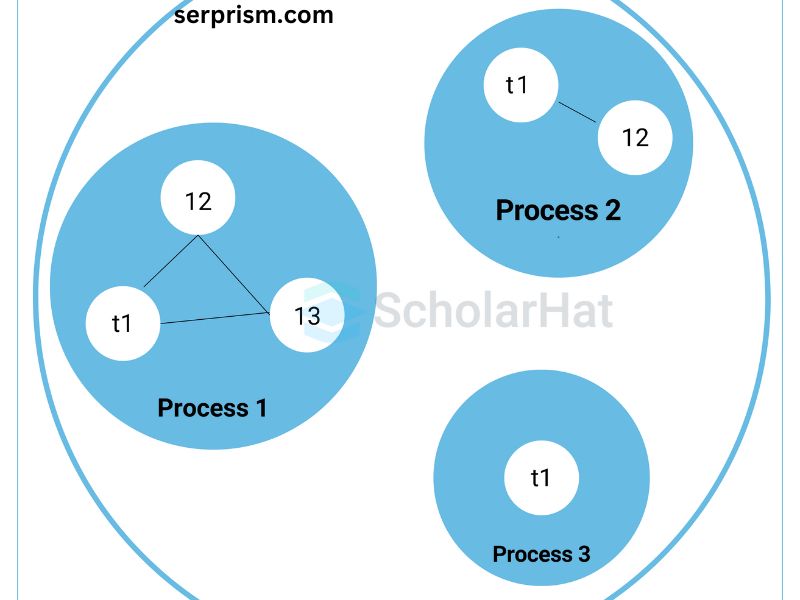

- Horizontal Scaling: Does the application need to scale across multiple machines? Horizontal scaling involves distributing work across many servers, which often involves using distributed systems techniques like load balancing, data partitioning, and replication.

- Concurrency vs. Parallelism: Concurrency means making progress on multiple tasks over overlapping time periods, which threads can provide even on a single core through time slicing. Parallelism means executing tasks at the same instant, which requires multiple cores or, when scaling out horizontally, multiple machines.

It’s essential to consider how your threading model fits with the overall architecture of your system, particularly as it grows. Systems that rely too heavily on shared memory and tight synchronization may face challenges when trying to scale horizontally.
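Data partitioning, mentioned under horizontal scaling, can be sketched as a function that maps each key to one of a fixed number of partitions. This is a simplified illustration: the function names and the `user-N` key format are hypothetical, and real systems often use more elaborate schemes.

```python
import hashlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition using a hash that is stable across processes."""
    # Python's built-in hash() for strings is randomized per process by
    # default, so a cryptographic digest is used to keep the mapping stable.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def partition_keys(keys: list[str], num_partitions: int) -> dict[int, list[str]]:
    """Group keys by the partition they are assigned to."""
    buckets: dict[int, list[str]] = defaultdict(list)
    for key in keys:
        buckets[partition_for(key, num_partitions)].append(key)
    return dict(buckets)


if __name__ == "__main__":
    keys = [f"user-{i}" for i in range(10)]
    for partition, members in sorted(partition_keys(keys, 3).items()):
        print(partition, members)
```

With plain modulo partitioning, changing the number of partitions reassigns most keys. Techniques such as consistent hashing are commonly used to reduce that movement.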

6. How Do You Ensure Fault Tolerance?

In a multithreaded application, fault tolerance refers to the ability of the system to continue functioning correctly even in the presence of thread failures, crashes, or unexpected behaviors. Key considerations include:

- Thread Isolation: Threads should be designed to minimize dependencies on each other. If one thread crashes, it shouldn’t affect the entire application. Techniques like error handling, isolation, and retries can be critical.

- Graceful Recovery: What happens when a thread encounters an error? Your application should be able to recover from thread failures without crashing. This might involve restarting threads or having backup systems in place to continue work when a thread fails.

- Logging and Monitoring: Use logging to track thread behaviors and monitor their performance. Monitoring can help detect issues before they affect the entire system.

Fault tolerance is especially critical in production environments, where maintaining uptime and reliability is a primary concern.
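The isolation, recovery, and logging ideas above might look like the following sketch, in which each task is retried a few times and a failure is returned as data instead of propagating. The names `run_with_retries` and `run_all`, the retry count, and the `workers` logger name are assumptions for illustration.

```python
import logging
from concurrent.futures import ThreadPoolExecutor

logger = logging.getLogger("workers")


def run_with_retries(task, arg, attempts: int = 3):
    """Run task(arg), retrying on failure; returns (ok, value_or_error)."""
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return True, task(arg)
        except Exception as exc:  # keep the failure local to this task
            last_error = exc
            logger.warning("task %r failed on attempt %d: %s", arg, attempt, exc)
    return False, last_error


def run_all(task, args, max_workers: int = 4):
    """Run every task in a pool; one failing task does not stop the others."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda a: run_with_retries(task, a), args))
```

Immediate retries suit transient errors. A production system would usually add a delay between attempts and distinguish retryable from permanent failures.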

7. How Do You Measure and Optimize Performance?

Once you’ve implemented threading and scaling strategies, it’s essential to measure the performance of your system and optimize accordingly. Some important questions to consider include:

- What metrics are important? CPU utilization, thread contention, memory usage, and response times are some of the key metrics for evaluating threading performance. Tools like profilers, logging, and monitoring software can help.

- Where are the bottlenecks? Is there contention over resources like memory or CPU time? Are threads spending a lot of time waiting on locks or external resources? Identifying the root causes of performance issues will guide optimization efforts.

- Can you improve with parallelism or better resource distribution? In some cases, increasing the number of threads may not be the solution. Rather, optimizing algorithms, reducing locking contention, or utilizing better data partitioning strategies might yield better results.

Understanding how threads interact with each other and the system’s resources will enable you to optimize the application effectively.
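To find out whether threads are spending their time waiting on locks, one option is to instrument the lock itself. The `TimedLock` wrapper below is a hypothetical sketch, not a standard library class. It records how long callers wait before acquiring the underlying lock.

```python
import threading
import time


class TimedLock:
    """Lock wrapper that records how long callers wait to acquire it."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.total_wait = 0.0
        self.acquisitions = 0

    def __enter__(self) -> "TimedLock":
        start = time.perf_counter()
        self._lock.acquire()
        waited = time.perf_counter() - start
        # Safe to update the statistics here: this thread now holds the lock.
        self.total_wait += waited
        self.acquisitions += 1
        return self

    def __exit__(self, exc_type, exc, tb) -> None:
        self._lock.release()


def contended_workload(lock: TimedLock, n_threads: int, hold_seconds: float) -> None:
    """Start n_threads that each hold the lock for hold_seconds."""

    def worker() -> None:
        with lock:
            time.sleep(hold_seconds)  # simulate work done while holding the lock

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


if __name__ == "__main__":
    lock = TimedLock()
    contended_workload(lock, n_threads=4, hold_seconds=0.02)
    print(f"average wait: {lock.total_wait / lock.acquisitions:.3f}s")
```

A high average wait relative to the time spent holding the lock suggests contention, which points toward shorter critical sections or finer-grained locking before adding more threads.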

8. Are There Alternatives to Using Threads?

In some cases, multithreading might not be the best approach for scaling or concurrency. Some alternatives include:

- Asynchronous Programming: In certain environments, asynchronous programming models (like Node.js or Python’s async/await) may provide a more efficient way to handle concurrency without the overhead of managing threads.

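- Actor Models: Erlang and the Akka toolkit use the actor model, where independent “actors” keep their own private state and communicate only by exchanging messages. Because no memory is shared between actors, this approach can avoid many of the synchronization complexities of traditional multithreading.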

- Microservices: Instead of scaling one monolithic application with threads, you might consider breaking your application into smaller, independently scalable services.

Choosing the right concurrency model depends on your system’s requirements and trade-offs.
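For comparison with a thread-based design, here is a minimal sketch of the asynchronous alternative using Python's `asyncio`. The `fetch_async` coroutine is a hypothetical stand-in for a non-blocking network call. All tasks run on a single thread and overlap only while they are waiting.

```python
import asyncio
import time


async def fetch_async(item: int) -> int:
    """Hypothetical stand-in for a non-blocking network call."""
    await asyncio.sleep(0.05)  # yields control to the event loop while waiting
    return item * 2


async def fetch_all(items: list[int]) -> list[int]:
    # gather() runs the coroutines concurrently on one thread and
    # returns their results in the same order as the inputs.
    return await asyncio.gather(*(fetch_async(i) for i in items))


def run(items: list[int]) -> tuple[list[int], float]:
    """Run all fetches and return (results, elapsed_seconds)."""
    start = time.perf_counter()
    results = asyncio.run(fetch_all(items))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = run(list(range(10)))
    print(results, f"{elapsed:.2f}s")
```

This model avoids locks for most shared state because only one coroutine runs at a time, but any blocking call inside a coroutine stalls every other task on the loop.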

Conclusion

The decision to use threads and scale your system is a multifaceted one. It requires an in-depth understanding of both the problem you’re solving and the available resources at your disposal. From synchronizing threads to managing resource consumption, the questions above provide a roadmap for approaching threading and scaling in a way that maximizes performance and maintains system reliability.

By carefully considering each of these questions, developers can ensure that their applications are both performant and scalable, capable of handling increasing loads as the application grows and evolves.